Self-Driving Car Accidents

Did you know that Ohio is scheduled to become one of the largest test areas for self-driving cars? In June 2018, then-Governor John Kasich broke ground on a testing center in Marysville, Ohio. The center, run by The Ohio State University, is three times larger than Disneyland, and it includes a number of test tracks where people can experiment with self-driving cars. But that’s not all. Four cities in Ohio have agreed to allow self-driving cars on the road alongside other motorists. This has the potential to become disastrous, as far as autonomous vehicle accidents are concerned.

Self-Driving Cars in Ohio

As of December 2018, Columbus, Athens, Marysville, and Dublin, Ohio have agreed to allow self-driving cars on the road. A handful of other cities, including Cleveland, Youngstown, and Springboro, have expressed interest in this program, which is run by DriveOhio. This same program is responsible for the Marysville self-driving car testing center. Although it isn’t clear exactly when these self-driving cars will appear on the roads, when they do, there will be roughly 1,200 of them circulating alongside traditional vehicles.

On top of this, the city of Columbus has launched an autonomous shuttle service. Called May Mobility, it consists of three self-driving vehicles that make a loop around the Scioto Mile from 6 am to 10 pm every day. Each vehicle has four seats and a human backup driver, just in case something goes wrong. While this is an interesting leap forward technologically, it’s very risky for the other drivers on the road.

Self-Driving Vehicle Accidents

Self-driving cars seem to be a great concept, and the goal is to provide convenience. However, there are problems associated with these vehicles, and accidents are always a concern. Listed below are a few of the problems associated with the safety of self-driving cars.

Misinterpretation of Human Drivers

One significant challenge with self-driving car technology lies in its ability to accurately interpret the behavior of human drivers on the road. Autonomous vehicles rely on sophisticated algorithms to predict and respond to the actions of human drivers.

The unpredictable nature of human behavior, including sudden lane changes, aggressive driving, or unexpected stops, can pose difficulties for self-driving cars. When the technology struggles to predict and adapt to these human behaviors, it can lead to accidents involving both autonomous and traditional vehicles.

Sensor Failures

Self-driving cars are loaded with a multitude of sensors, including cameras, LiDAR, radar, and ultrasonic sensors, to perceive their surroundings. These sensors provide crucial data for navigation and decision-making.

Sensor failures, such as camera malfunctions or radar errors, can impair the vehicle’s ability to accurately detect obstacles, other vehicles, or pedestrians. This greatly increases the likelihood of a self-driving car accident.

Software Glitches

Autonomous vehicles rely on complex software systems that control various aspects of their operations, from navigation and decision-making to communication with other vehicles.

Software glitches or bugs can manifest as errors in the code, causing unexpected behaviors or malfunctions in the vehicle’s operation. These glitches can range from minor hiccups that do not affect safety, to more critical system failures that result in serious crashes.

Lack of Human Intervention

One of the key challenges of self-driving vehicles is the expectation that they can operate, in all situations, without human intervention.

While autonomous systems are designed to handle a wide range of scenarios, they may still encounter unexpected and complex situations. In such cases, the technology may struggle to make safe decisions, potentially leading to self-driving car crashes. The ability for human intervention or oversight remains a crucial aspect of self-driving car safety.

Incomplete or Outdated Mapping Data

Self-driving cars rely heavily on detailed mapping data to navigate their environment accurately. However, mapping data can become incomplete, outdated, or inaccurate due to changes in road infrastructure or construction.

When autonomous vehicles encounter discrepancies between their mapping data and the current state of the road, it can lead to confusion or errors in route planning. This can potentially cause accidents involving a driverless vehicle.

Limited Ability in Adverse Weather

Driverless cars may face challenges when maneuvering in adverse weather conditions, such as heavy rain, snow, or fog.

Self-driving car technology, which primarily relies on sensors and cameras, may struggle to adapt to reduced visibility or slippery road conditions. This limitation can increase the risk of car accidents in adverse weather conditions, as the technology may not perform optimally in such challenging environments.

Driver Assistance Confusion

In some cases, drivers may confuse vehicles equipped with driver assistance features with fully autonomous vehicles. This misunderstanding can lead to accidents when drivers overestimate the capabilities of their vehicles and fail to remain attentive behind the wheel. Clear communication and education about the limitations of driver assistance systems are crucial to prevent such confusion and accidents.

Intersection Complexities

Intersections represent one of the most complex driving situations for self-driving cars. Autonomous vehicles must navigate intricate traffic patterns with other vehicles and pedestrians at intersections.

Issues may arise when self-driving cars misinterpret signals, misjudge the intentions of other drivers, or make incorrect situational decisions, potentially resulting in accidents.

Unpredictable Human Behavior

Human drivers often engage in unpredictable behavior on the road, such as sudden lane changes, aggressive maneuvers, or unexpected stops. Self-driving cars may struggle to anticipate and respond effectively to such behavior, increasing the risk of accidents involving autonomous vehicles.

The challenge lies in developing self-driving capabilities that can accurately predict and adapt to the diverse and sometimes erratic behaviors of human drivers.

Regulatory and Liability Issues

Accidents involving self-driving cars can raise complex legal and liability issues. Determining responsibility and liability for accidents may become a legal challenge as self-driving technology becomes more widespread.

Regulatory frameworks and laws surrounding autonomous vehicles are still evolving and may vary from one jurisdiction to another. This creates additional complexities in the aftermath of self-driving car accidents.

If you’ve been in an accident involving a driverless car, you need to call me as soon as possible. The laws surrounding these vehicles are new and constantly evolving. We can discuss your situation and see how we can move forward to obtain financial compensation.

Excitement About Fully Self-Driving Cars

Of course, there is excitement behind the evolution of self-driving cars. They are advertised with a lot of great promises, though they come with a lot of “buts.”

Reduce Human Error

Self-driving cars are expected to reduce accidents caused by human error, such as distracted driving, speeding, or impaired driving. Autonomous cars rely on automated driving systems that can make quicker and more accurate decisions, potentially leading to safer roadways.

However, there is a concern that if these automated systems fail or make incorrect decisions, it may result in a self-driving car accident. Technical glitches or sensor malfunctions could result in the inability to correctly identify hazards or make safe driving decisions.

Advanced Sensors and Perception

Autonomous cars are equipped with advanced sensors, such as LiDAR, radar, and cameras, that provide a 360-degree view of the vehicle’s surroundings. This technology enables autonomous cars to detect and respond to objects, pedestrians, and other vehicles with precision.

If these sensors become obstructed, damaged, or fail to function correctly, self-driving cars may misinterpret their surroundings, potentially leading to self-driving car crashes. Overreliance on sensor technology without a backup plan could pose serious risks.

Constant Vigilance

Autonomous cars are designed to maintain constant vigilance and awareness of their surroundings, while never getting fatigued or distracted during a journey. This feature is expected to contribute to self-driving car safety.

However, the expectation of constant vigilance may lead to complacency among human occupants. They might assume that the autonomous car is infallible, potentially disengaging from the driving tasks entirely. In the event of a sudden need for human intervention, this complacency could result in delayed reactions and accidents involving self-driving cars.

Reduced Traffic Congestion

Self-driving cars can communicate with each other, which in theory should allow for coordinated traffic flow and reduced congestion. This feature has the potential to make daily commutes more efficient.

Over-reliance on this communication technology could pose a danger if a self-driving car misinterprets or loses communication with other vehicles or traffic systems. In such cases, the anticipated reduction in traffic congestion may not materialize.

Automated Driving Systems

Autonomous cars are equipped with advanced automated driving systems that can take over various driving tasks, including acceleration, braking, and lane-keeping. This technology is expected to improve overall road safety.

The danger lies in the transition between automated and manual driving. If a driver has not maintained proficiency in manual driving skills and must take control in an emergency, they may not be adequately prepared to avoid a self-driving car crash. The handover of control between the car and the driver must be seamless to prevent accidents involving self-driving cars.

No Need for a Steering Wheel

Some self-driving car concepts eliminate the need for a steering wheel altogether, as they are designed for fully autonomous operation. This feature represents a significant departure from traditional vehicles and reflects the potential for complete automation.

In emergencies or situations where manual control becomes necessary, the absence of a steering wheel could leave passengers without a means to take immediate action. This limitation may lead to accidents involving self-driving cars when human intervention is required.

As noted, self-driving cars are not without complications, and those complications pose dangers on the road.

Fatal Uber Accident

Everyone’s fear of self-driving cars came true in March of 2018 when a woman died in an accident with one of these vehicles. The woman, Elaine Herzberg, a 49-year-old resident of Tempe, Arizona, was crossing the road in front of a self-driving Uber vehicle when the crash occurred. The vehicle failed to make a required emergency stop when she stepped out in front of it because its safety features (which included an emergency braking procedure) were turned off. Although a human driver was behind the wheel, the car was being operated by the computer. Due to the lack of the emergency braking features, the alarm that would have alerted the driver to take over and hit the brakes didn’t go off. As a result, Herzberg was killed. This shouldn’t have happened. Self-driving vehicles need to undergo more rigorous testing before they are unleashed on public roadways.

Additional Accidents

On top of the Tempe, Arizona accident, there have been two other related fatalities in recent years. The first took place in Williston, Florida, in 2016. The self-driving car, a Tesla Model S, didn’t stop when a tractor-trailer turned in front of it. The driver, Joshua Brown, was in the car when it went underneath the tractor-trailer at full speed. He was killed instantly. The second incident occurred in Mountain View, California, in 2018. That driver was in a self-driving Tesla Model X. The car’s computer, which was doing the driving, became confused by the painted road construction lines on Highway 101. It crashed into two other vehicles and then burst into flames, killing the driver. The people in the other two vehicles survived, but their lives were negatively impacted by the accident.

New Element of Danger on the Streets

It’s clear that these self-driving cars, while supposedly the “way of the future,” are also hazardous to other drivers on the roads. Too many things can go wrong. The emergency braking features could be turned off. The computer that drives the car could become confused and swerve into another lane or even oncoming traffic. Since the average driver can’t tell which vehicles are self-driven (due to the human “backup driver”), these cars create a precarious situation. They are a potential danger to everyone around them, including pedestrians. Until the technology has advanced to the point that it’s foolproof, the potential for danger is very high.

Hurt In A Collision With a Self-Driving Car?

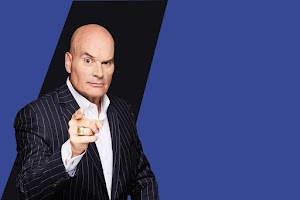

If you were injured in a car accident caused by a self-driving vehicle, you have options. The other driver, which in this case includes the vehicle’s manufacturer, may be at fault. Call me as soon as possible to discuss your claim. As your injury attorney, I’ll work on your behalf to ensure you are fairly compensated, and I’ll Make Them Pay!®

